In the last post, we discussed NSX-T Logical Routing. Before we start creating T0 and T1 Routers (Gateways) in NSX-T, let’s discuss NSX-T Logical Switching.

NSX-T Switching benefits include, but are not limited to, flexible scalability across multi-tenant data centers, the ability to use existing hardware, and stretching Layer 2 over Layer 3 networks.

Logical switching involves several concepts, including Segments, Segment Ports and Uplinks. These are defined on the Transport Nodes. Segments carry traffic over an Overlay-backed or vLAN-backed vDS Switch. Segment Profiles allow common configurations to be applied.

A few concepts that I explained in earlier posts are included here as well, to make the flow easier to follow:

Tunnelling (GENEVE Protocol)

Traffic from one Hypervisor (ESXi/KVM Transport Node) to another travels through a tunnel, using an Overlay protocol. The traffic leaves the VM as a Layer 2 frame. The Logical Switch looks at the Layer 2 frame, sees that the destination is on a different Hypervisor, and sends it over the tunnel. The Overlay protocol used in NSX-T is Geneve, which provides encapsulation of data packets.

Geneve-encapsulated packets are exchanged as follows:

- The source TEP encapsulates the VM's frame in a Geneve header

- The encapsulated UDP packet is transmitted to the destination TEP over port 6081

- The destination TEP decapsulates the Geneve header and delivers the original frame to the destination VM

Geneve is the tunnel encapsulation protocol. It takes the role that VXLAN played in NSX-V. Each broadcast domain is implemented by tunnelling VM-to-VM and VM-to-Logical Router traffic. Geneve runs over UDP, which adds an 8-byte UDP header, and uses a 24-bit VNI to identify the segment.
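The encapsulation and decapsulation steps above can be sketched in Python. This is a minimal illustration, not how NSX-T implements it: it builds only the fixed 8-byte Geneve base header described in RFC 8926, assumes no Geneve options, and leaves the outer UDP/IP headers to the network stack. The function names `geneve_header`, `encapsulate` and `decapsulate` are hypothetical.

```python
import struct

GENEVE_UDP_PORT = 6081   # destination UDP port used between TEPs
ETH_BRIDGING = 0x6558    # Geneve protocol type for an inner Ethernet frame


def geneve_header(vni: int) -> bytes:
    """Build the 8-byte Geneve base header (no options) for a 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    ver_optlen = 0       # version 0, option length 0
    flags = 0            # O and C bits clear
    # Last 32 bits of the header: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!BBHI", ver_optlen, flags, ETH_BRIDGING, vni << 8)


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Source TEP side: prepend the Geneve header to the VM's Layer 2 frame."""
    return geneve_header(vni) + inner_frame


def decapsulate(payload: bytes) -> tuple[int, bytes]:
    """Destination TEP side: strip the header, return (VNI, original frame).

    Assumes the sender attached no Geneve options.
    """
    _, _, _, vni_and_reserved = struct.unpack("!BBHI", payload[:8])
    return vni_and_reserved >> 8, payload[8:]
```

The result of `encapsulate` would be the payload of a UDP datagram sent to `GENEVE_UDP_PORT` on the destination TEP.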

What’s a Virtual Network Identifier (VNI)?

An NSX-T Data Center Logical Switch reproduces switching functionality, including Broadcast, Unknown Unicast and Multicast (BUM) traffic, in a virtual environment completely decoupled from the underlying hardware. Each Logical Switch has a Virtual Network Identifier (VNI), similar to a vLAN ID.

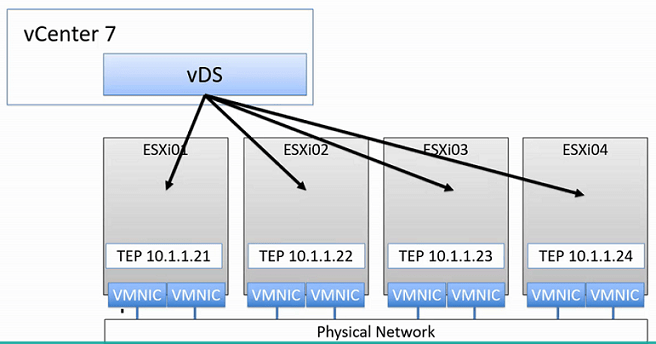

TEP – Tunnel End Point

- Tunnel endpoints enable hypervisor hosts to participate in an NSX-T network overlay

- The NSX-T overlay deploys a Layer 2 network over an existing physical network fabric by encapsulating frames inside of packets and transferring the encapsulating packets over the underlying transport network

- The underlying transport network can consist of either L2 or L3 networks. The TEP is the connection point at which encapsulation and decapsulation take place

Segments (Logical Switch)

- Segments are Virtual Layer 2 domains. A segment was earlier called a logical switch

- Overlay Segments

- vLAN-backed Segments

- Defined by VNI numbers, similar to a vLAN tag ID

A vLAN-backed segment is a layer 2 broadcast domain that is implemented as a traditional vLAN in the physical infrastructure. This means that traffic between two VMs on two different hosts but attached to the same vLAN-backed segment is carried over a vLAN between the two hosts. The resulting constraint is that you must provide an appropriate vLAN in the physical infrastructure for those two VMs to communicate at layer 2 over a vLAN-backed segment

In an overlay-backed segment, two VMs on different hosts but attached to the same overlay segment have their layer 2 traffic carried by a tunnel between the hosts. NSX-T Data Center instantiates and maintains this IP tunnel without the need for any segment-specific configuration in the physical infrastructure. As a result, the virtual network infrastructure is decoupled from the physical network infrastructure. That is, you can create segments dynamically without any configuration of the physical network infrastructure

The default number of MAC addresses learned on an overlay-backed segment is 2048. The default MAC limit per segment can be changed through the API field “remote_overlay_mac_limit” in “MacLearningSpec”.

When creating a Segment, you can define a Gateway Address, choose which upstream Router (Gateway) it connects to, and define a DHCP range if required. When creating a Logical Switch in the manager, you’re only creating a Layer 2 domain. So Segments are more powerful and more advanced than a Logical Switch; a Segment is really an advanced Logical Switch.

- Layer 2 Segments can be created without connecting to Tier 0/1 Routers (Gateways). VMs associated with the same Segment still can communicate with each other

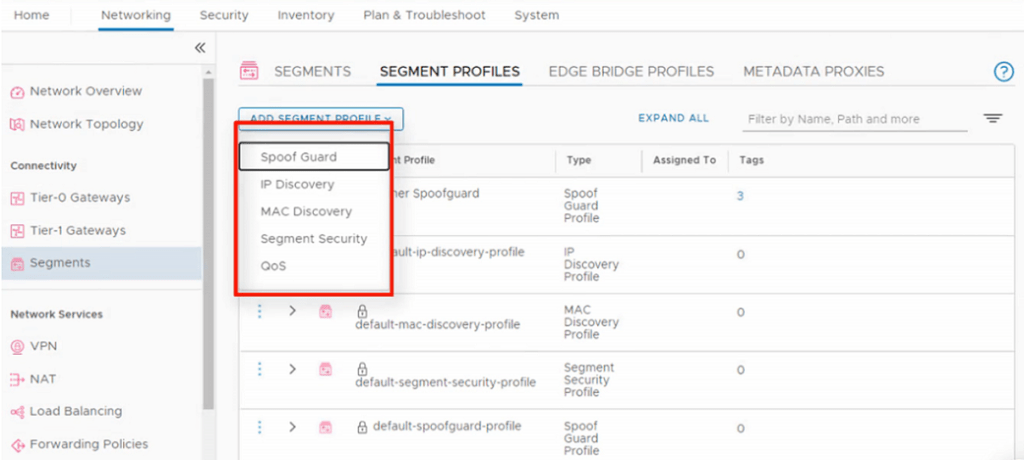
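The Segment options described above (gateway address, upstream gateway, DHCP range) can be pictured as the request body you would send when creating a Segment through the API. The sketch below only builds that body and sends nothing. The field names (`display_name`, `transport_zone_path`, `subnets`, `gateway_address`, `dhcp_ranges`, `connectivity_path`) follow my understanding of the NSX-T Policy API Segment object and should be checked against the API reference for your version. The helper `segment_payload` and all example paths and addresses are hypothetical.

```python
import json


def segment_payload(name, transport_zone_path, gateway_cidr=None,
                    dhcp_ranges=None, tier_gateway_path=None):
    """Build a Segment request body (field names are assumptions, see above)."""
    body = {
        "display_name": name,
        "transport_zone_path": transport_zone_path,
    }
    if gateway_cidr:
        # Gateway address for the segment, in CIDR form (e.g. 10.10.10.1/24).
        subnet = {"gateway_address": gateway_cidr}
        if dhcp_ranges:
            subnet["dhcp_ranges"] = list(dhcp_ranges)
        body["subnets"] = [subnet]
    if tier_gateway_path:
        # Upstream Tier-0/Tier-1 gateway the segment connects to.
        body["connectivity_path"] = tier_gateway_path
    return body


# Hypothetical transport zone path, used for illustration only.
TZ = "/infra/sites/default/enforcement-points/default/transport-zones/overlay-tz"

# A Layer 2 only segment: no gateway address, no upstream gateway.
isolated = segment_payload("app-l2-only", TZ)

# A routed segment with a gateway address, a DHCP range and a Tier-1 uplink.
routed = segment_payload(
    "web-segment", TZ,
    gateway_cidr="10.10.10.1/24",
    dhcp_ranges=["10.10.10.100-10.10.10.200"],
    tier_gateway_path="/infra/tier-1s/t1-gw-01",
)
print(json.dumps(routed, indent=2))
```

The `isolated` body matches the point made above: a Segment without a Tier-0/Tier-1 connection is still a valid Layer 2 domain.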

Segment Profiles

Segment profiles provide Layer 2 networking configurations for Segments and Ports. Several types of Segment Profile are available:

- Spoof Guard

- IP Discovery – Learns the VM MAC and IP addresses

- MAC Discovery – Supports MAC learning and MAC address change

- Segment Security – Provides stateless layer 2 and layer 3 security

- QoS – Provides high quality and dedicated network performance for traffic

The default profiles of each type are not editable.

To understand Segment Profiles further, refer to this link from VMware:

Networking – Connectivity – Segments – Segment Profiles

Logical Switching Packet Forwarding

The NSX Controller maintains a set of tables that are used to identify data plane component associations and to forward traffic. These are:

- TEP Table – Maintains VNI-to-TEP IP bindings

- ARP Table – Maintains VM MAC to VM IP mapping

- MAC Table – Maintains the VM MAC address to TEP IP mapping

TEP Table Update

- When a VM is powered on within a segment, the VNI-to-TEP mapping is registered on the transport node in its local TEP table

- Each transport node updates the central control plane about the learned VNI-to-TEP IP mapping

- Central Control Plane maintains the consolidated entries of VNI-to-TEP IP mappings

- The central control plane sends the updated TEP table to all the transport nodes where the VNI is realised
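The four TEP table steps above can be modelled with a small sketch. It is a toy simulation, assuming in-memory objects instead of real control-plane messaging. The class names `ControlPlane` and `TransportNode` are hypothetical and do not correspond to NSX-T components.

```python
from collections import defaultdict


class ControlPlane:
    """Toy central control plane holding consolidated VNI-to-TEP bindings."""

    def __init__(self):
        self.tep_table = defaultdict(set)   # VNI -> set of TEP IPs
        self.nodes = []

    def register(self, node):
        self.nodes.append(node)

    def report(self, vni, tep_ip):
        # Consolidate the mapping reported by a transport node.
        self.tep_table[vni].add(tep_ip)
        # Push the updated entry only to nodes where this VNI is realised.
        for node in self.nodes:
            if vni in node.local_vnis:
                node.tep_table[vni] = set(self.tep_table[vni])


class TransportNode:
    """Toy transport node with a local copy of the TEP table."""

    def __init__(self, tep_ip, ccp):
        self.tep_ip = tep_ip
        self.ccp = ccp
        self.local_vnis = set()
        self.tep_table = {}
        ccp.register(self)

    def power_on_vm(self, vni):
        # Register the VNI locally, then update the control plane.
        self.local_vnis.add(vni)
        self.ccp.report(vni, self.tep_ip)


ccp = ControlPlane()
host_a = TransportNode("192.168.10.11", ccp)
host_b = TransportNode("192.168.10.12", ccp)
host_c = TransportNode("192.168.10.13", ccp)

host_a.power_on_vm(5001)
host_b.power_on_vm(5001)
host_c.power_on_vm(5002)
```

After these calls, `host_a` and `host_b` both know the two TEPs for VNI 5001, while `host_c` holds no entry for it because that VNI is not realised there.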

MAC Table Update

- The VM MAC-to-TEP IP mapping is registered on the transport nodes in its local MAC address table

- Each transport node updates the central control plane about the learned VM MAC-to-TEP IP mapping

- The central control plane maintains the consolidated entries of VM MAC-to-TEP IP mappings

- The central control plane sends out the updated MAC table to all transport nodes where the VNI is realised

ARP Table Update

- The local VM IP-to-MAC mapping is registered on each transport node in its local ARP table

- Each transport node sends known VM IP-to-MAC mappings to the central control plane

- The central control plane updates its ARP table based on the VM IP-to-MAC received from the transport nodes

- The central control plane sends the updated ARP table to all the transport nodes

The ARP tables in both the central control plane and transport nodes are flushed after 10 minutes
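To show how the ARP and MAC tables work together, here is a minimal sketch of the lookup chain from a destination VM IP to the TEP that hosts it. It is an illustration under assumptions: the class `ForwardingTables` is hypothetical, time is passed in explicitly to keep the example deterministic, and the 10-minute flush is modelled as per-entry ageing, which may differ from the actual NSX-T behaviour.

```python
ARP_TIMEOUT_SECONDS = 10 * 60   # ARP entries are flushed after 10 minutes


class ForwardingTables:
    """Toy ARP and MAC tables as held by a transport node."""

    def __init__(self):
        self.arp = {}   # VM IP  -> (VM MAC, time the entry was learned)
        self.mac = {}   # VM MAC -> TEP IP

    def learn(self, vm_ip, vm_mac, tep_ip, now):
        self.arp[vm_ip] = (vm_mac, now)
        self.mac[vm_mac] = tep_ip

    def flush_arp(self, now):
        # Drop ARP entries that have reached the timeout.
        self.arp = {
            ip: (mac, learned)
            for ip, (mac, learned) in self.arp.items()
            if now - learned < ARP_TIMEOUT_SECONDS
        }

    def tep_for_ip(self, vm_ip, now):
        """Resolve VM IP -> VM MAC (ARP table) -> TEP IP (MAC table)."""
        self.flush_arp(now)
        entry = self.arp.get(vm_ip)
        if entry is None:
            return None   # unknown IP: an ARP request would be needed
        vm_mac, _ = entry
        return self.mac.get(vm_mac)


tables = ForwardingTables()
tables.learn("10.10.10.21", "00:50:56:aa:bb:01", "192.168.10.12", now=0)
```

With this state, a lookup for `10.10.10.21` shortly after learning returns the TEP `192.168.10.12`, and a lookup made 600 seconds or more after learning returns `None` because the ARP entry has aged out.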

Flooding Traffic

- An NSX-T segment behaves like a LAN, providing the capability of flooding traffic to all the devices attached to this segment

- NSX-T does not differentiate between the different kinds of frames replicated to multiple destinations. Broadcast, unknown unicast, or multicast traffic will be flooded in a similar fashion across a segment

- In the overlay model, the replication of a frame to be flooded on a segment is orchestrated by the different NSX-T components

Head-End Replication Mode – The source transport node sends a copy of the frame to every other transport node connected to the segment

Hierarchical Two-Tier Replication – (Recommended best practice; performs better in terms of physical uplink bandwidth utilisation)

Transport nodes are grouped according to the subnet of the IP address of their TEP
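The difference between the two replication modes can be sketched by listing which TEPs the source sends a copy to. This is a simplified model with several assumptions: a /24 TEP subnet, one proxy TEP per remote subnet that replicates the frame locally, and the lowest IP address picked as the proxy. The real proxy selection is made by NSX-T and is not described here. All function names are hypothetical.

```python
import ipaddress
from collections import defaultdict


def head_end_targets(source, teps):
    """Head-end mode: the source sends one copy to every other TEP."""
    return [tep for tep in teps if tep != source]


def group_by_subnet(teps, prefix_len=24):
    """Group TEP IPs by the subnet they belong to (prefix length assumed)."""
    groups = defaultdict(list)
    for tep in teps:
        network = ipaddress.ip_interface(f"{tep}/{prefix_len}").network
        groups[network].append(tep)
    return groups


def two_tier_targets(source, teps, prefix_len=24):
    """Two-tier mode: copies to local-subnet TEPs plus one proxy per remote subnet."""
    source_net = ipaddress.ip_interface(f"{source}/{prefix_len}").network
    targets = []
    for network, members in group_by_subnet(teps, prefix_len).items():
        if network == source_net:
            targets.extend(tep for tep in members if tep != source)
        else:
            # Assumption: the lowest address acts as the proxy for its subnet.
            targets.append(min(members, key=ipaddress.ip_address))
    return targets


teps = ["10.0.1.1", "10.0.1.2", "10.0.2.1", "10.0.2.2", "10.0.2.3"]
print(head_end_targets("10.0.1.1", teps))   # 4 copies leave the source
print(two_tier_targets("10.0.1.1", teps))   # 2 copies leave the source
```

In this example the source sends four copies in head-end mode but only two in two-tier mode, which is where the saving in physical uplink bandwidth comes from.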

vSphere 7, vDS, and N-VDS (Integration)

- Since VMware vSphere 7.x, N-VDS is deprecated on ESXi, but N-VDS remains on KVM and Bare Metal Transport Nodes

- Therefore, these Layer 2 Segments will reside within the vDS Switch along with the Distributed Port Groups

* Screenshots captured from VMware vCenter Console and NSX-T Manager Console on Jan 07, 2022.