NSX-T 3.x High-Level Architecture

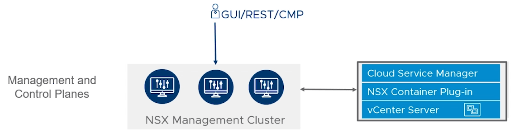

The three main elements of the NSX-T Data Center architecture are the Management, Control, and Data Planes. This architectural separation allows each plane to scale without impacting workloads.

Management and Control Planes

Each node in the Management and Control Planes includes the Policy, Management, and Control functions:

- The three-node NSX Management Cluster provides High Availability and Scalability (a single-node deployment can be used for POC and lab testing, but is not recommended for production)

- The UI and API serve users, automation tools, and Cloud Management Platforms (CMPs)

- NSX Policy configures Networking and Security functions

- The desired configuration is validated and replicated across all Nodes

- The dynamic state is maintained and propagated across all Nodes

- Management Plane: NSX-T UI/API, integration with Cloud Management Platforms (CMPs can push the desired configuration), and configuration changes

- Control Plane: computes and tracks the dynamic state of NSX-T (logical switching/routing, Distributed Firewall, VM state, etc.)

- Policy Manager: the GUI/API used to define networking and security policy

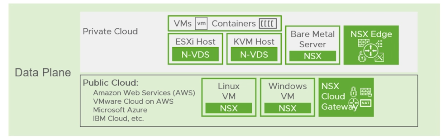

Data Plane

The Data Plane has several components and functions:

- Includes multiple endpoints (ESXi and KVM hosts, and NSX-T Edge Nodes)

- Contains various workloads such as VMs, containers, and applications running on bare-metal servers

- Forwards data plane traffic

- Uses a scale-out distributed forwarding model

- Implements Logical Switching, Distributed and Centralized Routing and Firewall filtering

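Data Plane: multiple endpoints (VMs/containers), NSX-T Edge Nodes for centralized services (NAT, Gateway Firewall, Load Balancer), traffic flow, etc. The Distributed Firewall itself runs on the host transport nodes, not on the Edge.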
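To make the scale-out distributed forwarding model concrete, here is a minimal Python sketch of distributed firewall filtering. It assumes a simplified rule format (source/destination CIDR, one destination port, ALLOW or DROP) and top-down, first-match evaluation. The `Rule` class, `evaluate()` function, host names, and addresses are hypothetical. Real NSX-T rules are built from groups, services, and sections, and the DROP default here is a parameter chosen for illustration.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified firewall rule (not the NSX-T rule schema)."""
    name: str
    src: str             # CIDR such as "10.0.1.0/24", or "any"
    dst: str             # CIDR such as "10.0.2.0/24", or "any"
    port: Optional[int]  # destination port; None matches any port
    action: str          # "ALLOW" or "DROP"


def _matches(cidr: str, addr: str) -> bool:
    return cidr == "any" or ip_address(addr) in ip_network(cidr)


def evaluate(rules: List[Rule], src: str, dst: str, port: int,
             default: str = "DROP") -> str:
    """Return the action of the first matching rule, or the default action."""
    for rule in rules:
        if (_matches(rule.src, src) and _matches(rule.dst, dst)
                and (rule.port is None or rule.port == port)):
            return rule.action
    return default


# One rule set is distributed to every host; each host filters its own VMs'
# traffic locally instead of sending it to a central firewall appliance.
RULES = [
    Rule("web-to-app", "10.0.1.0/24", "10.0.2.0/24", 8443, "ALLOW"),
    Rule("deny-ssh-to-app", "any", "10.0.2.0/24", 22, "DROP"),
]
hosts = {"esxi-01": RULES, "esxi-02": RULES}

print(evaluate(hosts["esxi-01"], "10.0.1.5", "10.0.2.7", 8443))  # ALLOW
print(evaluate(hosts["esxi-02"], "10.0.3.9", "10.0.2.7", 22))    # DROP
```

Because every host holds the same rules and filters only its own traffic, adding hosts adds filtering capacity, which is the scale-out property described in the Data Plane list.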

VMware NSX-V vs. NSX-T Comparison

The following compares NSX-V and NSX-T. NSX-V is no longer available in the market, but it is included here for context.

- NSX-V is managed through vCenter-based tools, whereas NSX-T is decoupled from vCenter (vCenter can still be added as a Compute Manager in NSX-T)

- NSX-T supports ESXi, KVM, bare-metal servers, Kubernetes, OpenShift, AWS, and Azure (cross-platform support), whereas NSX-V supported only VMware vSphere

- NSX-T also serves as a networking solution for AWS Outposts

NSX-V vs. NSX-T Installation Comparison

- In NSX-V, NSX Manager is registered with vCenter; NSX-T is a standalone solution but can point to vCenter to register hosts

- NSX-V uses separate appliances for NSX Manager and NSX Controller, whereas from NSX-T 2.4 onwards the Manager and Controller are combined in a single virtual appliance

- NSX-V uses VXLAN as its overlay encapsulation, whereas NSX-T uses Geneve

- NSX-V logical switches use vSphere Distributed Switches (vDS), whereas NSX-T uses the N-VDS, which is decoupled from vCenter. Starting with vSphere 7.0 (which ships with vDS 7.0), VMware is moving NSX-T from the N-VDS back to the vDS

For more information about N-VDS vs. vDS, see the KB article from VMware.
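The VXLAN vs. Geneve difference is easiest to see at the header level. The sketch below uses Python's `struct` module to pack the fixed 8-byte VXLAN header (RFC 7348) and the Geneve base header plus TLV options (RFC 8926). Field layouts follow the RFCs. The option class, type, and payload in the usage lines are arbitrary placeholders, and what NSX-T itself carries in Geneve options is not modelled here.

```python
import struct

VXLAN_UDP_PORT = 4789    # IANA-assigned destination port (RFC 7348)
GENEVE_UDP_PORT = 6081   # IANA-assigned destination port (RFC 8926)
TRANS_ETHER_BRIDGING = 0x6558  # Protocol Type for an inner Ethernet frame


def _check_vni(vni: int) -> None:
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")


def vxlan_header(vni: int) -> bytes:
    """Fixed 8-byte VXLAN header: I flag set, 24-bit VNI, no options."""
    _check_vni(vni)
    return struct.pack("!II", 0x08 << 24, vni << 8)


def geneve_option(opt_class: int, opt_type: int, data: bytes) -> bytes:
    """One Geneve TLV option; data must be a multiple of 4 bytes (max 124)."""
    if len(data) % 4 or len(data) > 124:
        raise ValueError("option data must be 0-124 bytes in 4-byte multiples")
    # Length field counts 4-byte words of data, excluding the 4-byte option header.
    return struct.pack("!HBB", opt_class, opt_type, len(data) // 4) + data


def geneve_header(vni: int, options: bytes = b"") -> bytes:
    """Geneve version-0 base header followed by its variable-length options."""
    _check_vni(vni)
    opt_words = len(options) // 4
    if len(options) % 4 or opt_words > 63:
        raise ValueError("options must be a multiple of 4 bytes, at most 252")
    # First 16 bits: Ver (2) = 0 | Opt Len (6) | O (1) = 0 | C (1) = 0 | Rsvd (6)
    first16 = opt_words << 8
    return struct.pack("!HHI", first16, TRANS_ETHER_BRIDGING, vni << 8) + options


print(vxlan_header(5001).hex())   # 0800000000138900
print(geneve_header(5001).hex())  # 0000655800138900
opt = geneve_option(0xFFFF, 0x01, b"\x00\x00\x00\x2a")  # placeholder option
print(len(geneve_header(5001, opt)))  # 16
```

The VXLAN header is fixed and has no room for extra metadata, while Geneve's Opt Len field and TLV options let the encapsulation carry additional context; that extensibility is the practical difference between the two formats.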

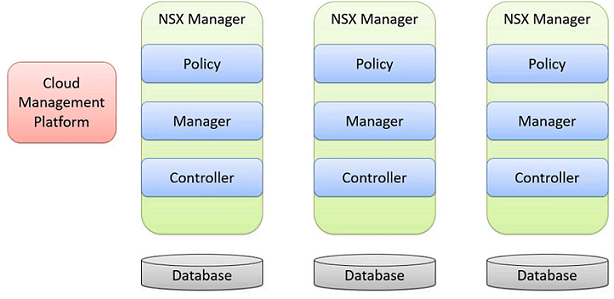

NSX-T Manager Architecture (NSX-T Management Cluster), Policy, Manager and Controller Roles

- NSX Manager is an appliance with three built-in roles: Policy, Manager, and Controller. The Management Plane includes the Policy and Manager roles, and the Central Control Plane includes the Controller role

- The NSX Management Cluster is formed by a group of three Manager Nodes

- The desired state is replicated in the distributed persistent database, providing the same configuration view to all nodes in the cluster

- NSX Manager Appliance is available in different sizes for different deployment scenarios

For sizing details, see NSX Manager VM and Host Transport Node System Requirements (VMware NSX-T Data Center 3.1).
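Why three Manager nodes rather than two? Here is a minimal sketch, assuming the cluster's replicated database needs a strict majority of nodes to stay writable, which is the usual rule for consensus-replicated stores. The helper names are hypothetical, and this is generic quorum arithmetic, not NSX-T code.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """Nodes that can fail while a majority is still reachable."""
    return cluster_size - majority(cluster_size)


def has_quorum(healthy_nodes: int, cluster_size: int = 3) -> bool:
    return healthy_nodes >= majority(cluster_size)


for size in (1, 2, 3):
    print(size, majority(size), tolerated_failures(size))
# 1 1 0
# 2 2 0
# 3 2 1
```

Under this assumption a two-node cluster tolerates no more failures than a single node, so three is the smallest size that survives the loss of one Manager.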

Benefits of NSX-T Management Cluster

- High Availability and Scalability of all services, including GUI and API

- Simplified installation and upgrade

- Easier operations with fewer VMs to monitor and maintain

- Support for compute managers such as VMware vCenter

- Operational tools and APIs for handling management tasks and for querying system state

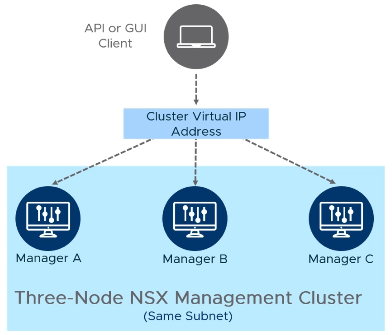

NSX-T Management Cluster with Virtual IP Address

The NSX Management Cluster is highly available and is configured as follows:

- All NSX Manager Nodes must be on the same Subnet (Network)

- One Manager Node is elected as the leader and the cluster’s Virtual IP is attached to the leader manager

- The cluster virtual IP address is used only for traffic destined for the NSX Manager nodes; traffic destined for any Transport Node (ESXi or KVM host) uses the management IP addresses of the individual nodes

- A single virtual IP address is used for API and GUI client access
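Here is a toy model of this behaviour: the VIP follows the elected leader, and API clients always target the VIP rather than a specific node. The `ManagerCluster` class, node names, the 192.0.2.10 documentation address, and the election rule (keep a healthy leader, otherwise pick the first healthy node by name) are hypothetical. NSX-T's real leader election is internal to the product. `/api/v1/cluster/status` is used only as an example Manager API path.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class ManagerCluster:
    """Toy model: the cluster VIP is attached to whichever node leads."""
    nodes: Dict[str, bool]          # node name -> healthy?
    vip: str
    leader: Optional[str] = None

    def elect(self) -> str:
        # Hypothetical rule: keep a healthy leader, else pick the first
        # healthy node in name order.
        if self.leader is not None and self.nodes.get(self.leader, False):
            return self.leader
        healthy = sorted(name for name, ok in self.nodes.items() if ok)
        if not healthy:
            raise RuntimeError("no healthy NSX Manager node")
        self.leader = healthy[0]
        return self.leader

    def fail(self, name: str) -> None:
        self.nodes[name] = False
        self.elect()  # the VIP moves if the failed node was the leader

    def vip_owner(self) -> str:
        return self.elect()

    def api_url(self, path: str) -> str:
        # Clients use the VIP, so the URL is unchanged by a failover.
        return f"https://{self.vip}{path}"


cluster = ManagerCluster({"nsx-mgr-01": True, "nsx-mgr-02": True,
                          "nsx-mgr-03": True}, vip="192.0.2.10")
print(cluster.vip_owner())                        # nsx-mgr-01
cluster.fail("nsx-mgr-01")
print(cluster.vip_owner())                        # nsx-mgr-02
print(cluster.api_url("/api/v1/cluster/status"))  # https://192.0.2.10/api/v1/cluster/status
```

The model deliberately ignores quorum; it only shows that a failover changes which node owns the VIP, not the address clients connect to.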

NSX-T Manager (Management Cluster) Installation Facts

- An NSX-T Manager installation deploys three VM appliances as a cluster to provide HA (a single appliance can be used for proof-of-concept and lab scenarios). The three VMs should be distributed across different hosts and datastores so that the cluster survives a physical host or storage failure. If the vSphere cluster has DRS enabled, apply a DRS anti-affinity rule to the NSX Manager VMs so that they run on different ESXi hosts

- If the NSX-T Manager nodes are not behind an external load balancer, they must all be in the same subnet to have Layer-2 adjacency (the recommended deployment uses the same management subnet as vCenter and the ESXi or KVM hosts)

- If the NSX-T Manager nodes are behind an external load balancer, they can be spread across different subnets, because the load balancer handles the Virtual IP (VIP). This load-balances traffic across the three VMs and allows the NSX-T Managers to sit on different networks. Consider the supported latency between Manager nodes when spreading them across subnets. In this scenario, all nodes are active, the GUI and API are available on every Manager, and traffic to the VIP is load-balanced across the Manager nodes
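The placement and subnet rules above can be expressed as a pre-deployment check. This is a minimal sketch under stated assumptions: the `ManagerVM` record and `check_deployment()` function are hypothetical, the inventory is typed in by hand rather than read from vCenter, and the latency consideration for split-subnet designs is not modelled. It uses only Python's standard `ipaddress` module.

```python
from dataclasses import dataclass
from ipaddress import ip_interface
from typing import List


@dataclass(frozen=True)
class ManagerVM:
    """Hypothetical inventory record for one NSX-T Manager appliance."""
    name: str
    host: str       # ESXi host currently running the VM
    datastore: str
    ip: str         # management IP with prefix length, e.g. "10.10.0.11/24"


def check_deployment(vms: List[ManagerVM],
                     behind_external_lb: bool = False) -> List[str]:
    """Return a list of problems; an empty list means the layout passes."""
    problems = []
    if len(vms) != 3:
        problems.append(f"expected 3 Manager nodes, found {len(vms)}")
    if len({vm.host for vm in vms}) < len(vms):
        problems.append("Managers share an ESXi host (add a DRS anti-affinity rule)")
    if len({vm.datastore for vm in vms}) < len(vms):
        problems.append("Managers share a datastore")
    subnets = {ip_interface(vm.ip).network for vm in vms}
    if len(subnets) > 1 and not behind_external_lb:
        problems.append("Managers span subnets without an external load balancer")
    return problems


vms = [
    ManagerVM("nsx-mgr-01", "esxi-01", "ds-01", "10.10.0.11/24"),
    ManagerVM("nsx-mgr-02", "esxi-02", "ds-02", "10.10.0.12/24"),
    ManagerVM("nsx-mgr-03", "esxi-02", "ds-03", "10.10.1.13/24"),
]
for problem in check_deployment(vms):
    print(problem)
# Managers share an ESXi host (add a DRS anti-affinity rule)
# Managers span subnets without an external load balancer
```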

NSX-T Manager Functions (Manager Role)

NSX-T Manager performs several functions:

- Receives and validates the configuration from NSX-T Policy

- Stores the configuration in the distributed persistent database called CorfuDB

- Publishes the configuration to the Central Control Plane

- Installs and prepares the Data Plane components

- Retrieves the statistical data from Data Plane components
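The first three functions form a write path: validate, persist, then publish. The sketch below models that order in plain Python, assuming a dictionary stands in for CorfuDB and a list of callbacks stands in for the Central Control Plane. `ManagerRole`, `apply()`, and the validation rule are hypothetical. Data Plane preparation and statistics retrieval are not modelled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Publisher = Callable[[str, dict], None]


@dataclass
class ManagerRole:
    """Toy model of the Manager role's write path (not NSX-T code)."""
    store: Dict[str, dict] = field(default_factory=dict)   # stands in for CorfuDB
    ccp: List[Publisher] = field(default_factory=list)     # stands in for the CCP

    def apply(self, object_id: str, config: dict) -> None:
        self._validate(config)                 # 1. receive and validate
        self.store[object_id] = dict(config)   # 2. persist the desired state
        for publish in self.ccp:               # 3. publish to the control plane
            publish(object_id, config)

    @staticmethod
    def _validate(config: dict) -> None:
        # Hypothetical rule, for illustration only.
        if not config.get("display_name"):
            raise ValueError("config must include a display_name")


published: List[str] = []
mgr = ManagerRole(ccp=[lambda oid, cfg: published.append(oid)])
mgr.apply("segment-web", {"display_name": "web"})
try:
    mgr.apply("segment-bad", {})
except ValueError as err:
    print("rejected:", err)
print(sorted(mgr.store), published)
# rejected: config must include a display_name
# ['segment-web'] ['segment-web']
```

Because validation runs first, a rejected change never reaches the store or the control plane.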

NSX-T Policy and NSX-T Manager Workflow (Manager and Policy Roles)

The components of the NSX-T Manager node (Manager, Policy, and Control Plane) interact with each other as follows:

- Reverse Proxy is the entry point to NSX-T Manager

- The Policy role handles all networking and security policies and passes them to the Manager role, which realizes them

- Proton is the core component of the NSX-T Manager role. It’s responsible for managing various functionalities such as Logical Switching, Logical Routing, Distributed Firewall, etc.

- Both NSX-T Policy and Proton persist data in CorfuDB
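This request flow can be sketched as a toy dispatcher. It assumes requests are routed by URL prefix, using the NSX-T base paths `/policy/api/` (Policy API) and `/api/` (Manager API), and that Policy persists the intent and then hands it to Proton. The functions, the `corfu` dictionary standing in for CorfuDB, and the key naming are hypothetical; how the reverse proxy is actually implemented is not described here.

```python
from typing import Dict

corfu: Dict[str, dict] = {}  # stands in for CorfuDB, shared by both roles


def route(path: str) -> str:
    """Decide which role serves a request, based on its URL prefix."""
    if path.startswith("/policy/api/"):
        return "policy"
    if path.startswith("/api/"):
        return "manager"   # the Manager role, i.e. Proton
    return "ui"            # everything else is treated as GUI content here


def proton_realize(object_id: str, spec: dict) -> None:
    corfu[f"manager/{object_id}"] = dict(spec)


def policy_apply(object_id: str, intent: dict) -> None:
    corfu[f"policy/{object_id}"] = dict(intent)  # Policy persists the intent
    proton_realize(object_id, intent)            # then hands it to Proton


def handle(path: str, body: dict) -> str:
    """Entry point playing the reverse proxy's role in this sketch."""
    target = route(path)
    object_id = path.rstrip("/").rsplit("/", 1)[-1]
    if target == "policy":
        policy_apply(object_id, body)
    elif target == "manager":
        proton_realize(object_id, body)
    return target


print(handle("/policy/api/v1/infra/segments/web", {"display_name": "web"}))  # policy
print(sorted(corfu))  # ['manager/web', 'policy/web']
```

Both roles write to the same store in this sketch, mirroring the point that Policy and Proton both persist data in CorfuDB.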